Meta demonstrates an AI system designed to give people more control over their AI art. Systems like this could also be a tool to help build the Metaverse.

AI-generated images have been making the rounds on the web for years. A few years ago, it was primarily GAN-based systems that generated deceptively real cats or people; today, multimodal models allow targeted creations via text input. Users of DALL-E 2, Midjourney and Craiyon (formerly DALL-E mini) are flooding Twitter, Instagram and other channels with AI images.

However, direct control over these systems is often quite limited: enter text and wait for images - that's it. Some systems can generate variants of interesting results. DALL-E can also be directed at a specific region of an existing image to change only that section. Then, for example, a flamingo appears in what was an empty pool.

Meta experiments with text plus sketch

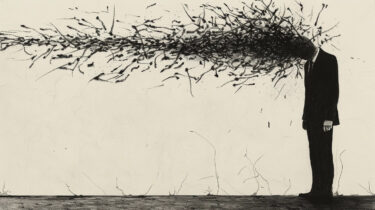

In a new paper, Meta researchers now demonstrate a multimodal AI system that enables more targeted image generation. Users can feed "Make-A-Scene" a sketch and then start the generation process by entering additional text.

While the results of other AI systems are often difficult to predict, Make-A-Scene allows people to realize their ideas more precisely, Meta writes.


To do this, users define the basic scene layout in the sketch. The text input then fills the sketch with AI-generated graphics. The model can also create its own layouts from text input alone, but users give up some control that way.

Meta sees Make-A-Scene as a step toward more targeted AI creation

According to Meta, some artists have been given test access to Make-A-Scene. One of the developers has tested the system with his children, for example to make monster bears ride on trains. There are no plans to release it for now: for Meta, Make-A-Scene is an experiment in AI creativity that focuses on user control.

To harness the potential of AI to foster creative expression, humans must be able to shape and control the content generated by the system, Meta writes. For that, a suitable system must be intuitive and easy to use, accepting input via speech, text, sketches, gestures or even eye movements.

With AI support, Meta hopes to create a new class of creative tools that will allow many people to create expressive messages in 2D, XR and virtual worlds.