A deepfake study investigates how reliably people recognize fakes. Here's how you can test yourself.

Artificial intelligence automates and simplifies the creation of fake text, fake audio and manipulated video. The necessary tools and methods are readily available and constantly improving. Experts have therefore long warned that these technologies could be misused for political influence.

Whether and how such influence might work depends largely on people's ability to detect AI fakes. A new study now examines how easily different modalities, such as video, audio and text, can fool people.

Fake benchmark with political deepfakes

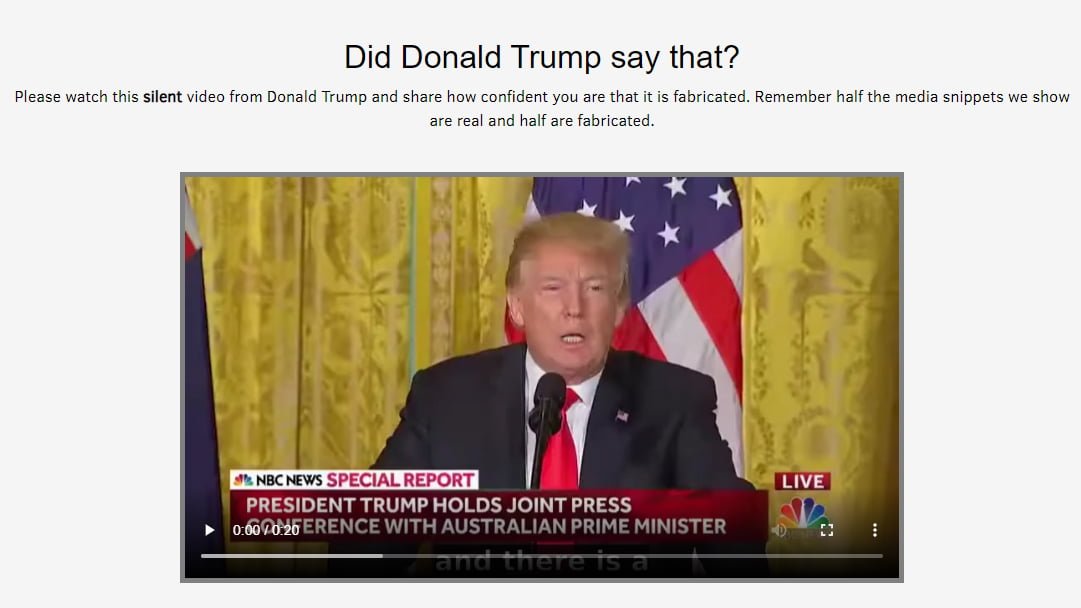

For their study, MIT AI researchers recruited nearly 500 participants to rate the authenticity of video, audio and text excerpts of Donald Trump and Joe Biden on a website.

In addition, more than 5,000 people visited the corresponding website and also participated in the deepfake test. All participants knew that half of the media shown was manipulated.

The content came from the Presidential Deepfake Dataset and included 32 videos of speeches by the two politicians - half real, half fake. The MIT researchers also made additional modifications to the videos, reducing the resolution and replacing audio tracks or text.

The approximately 5,700 participants gave a total of 6,792 ratings on the authenticity of the media. According to the researchers, participants relied more on how something was said, i.e. the audiovisual cues, than on the actual content of the speech.

However, in cases where the content clearly contradicted public perceptions of politicians' beliefs, participants relied less on visual cues, the researchers found.

Less complex fakes are better fakes

Two trends emerged in the study: first, the more modalities (audio, video, text) a fake has, the easier it is for people to recognize it as a fake. And second, the technology for audio fakes does not yet match that for visual deepfakes.

For example, participants were worse at recognizing fake text than fake video with a transcript or fake video with an audio track. Fake audio tracks were also recognized as fakes more often than fake videos.

A short intro video introduces study participants to the possibilities of deepfake technology. | Video: MIT

Humans can detect the visual inconsistencies created by lip-syncing deepfake manipulations, the paper says. However, accuracy for such videos without an audio track is only 64 percent. It rises to 66 percent when subtitles are added. For videos with text and audio, on the other hand, accuracy averages 82 percent.

According to the researchers, ratings generally include perceptual cues from video and audio, as well as considerations of content, shaped by the expectations participants have of the speaker. However, when evaluating content alone - i.e., pure text - accuracy is only slightly above chance, averaging 57 percent.
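To put the reported figures side by side, here is a minimal Python sketch that computes how far each accuracy lies above random guessing. It uses only the percentages quoted in this article. The 50 percent baseline is an assumption that follows from half of the shown media being fake, and the condition labels and the helper name `margin_over_chance` are illustrative, not taken from the study.

```python
# Accuracy figures as reported in this article (percent of correct ratings).
# The condition labels are illustrative shorthand, not the study's own terms.
REPORTED_ACCURACY = {
    "text only": 57,
    "silent video": 64,
    "silent video with subtitles": 66,
    "video with text and audio": 82,
}

# Assumption: half of the items are fake, so random guessing scores 50 percent.
CHANCE_BASELINE = 50


def margin_over_chance(accuracy_percent: int, baseline: int = CHANCE_BASELINE) -> int:
    """Return how many percentage points an accuracy lies above the baseline."""
    return accuracy_percent - baseline


margins = {name: margin_over_chance(acc) for name, acc in REPORTED_ACCURACY.items()}

# Print the conditions from the smallest to the largest margin.
for name, points in sorted(margins.items(), key=lambda item: item[1]):
    print(f"{name}: {points} points above chance")
```

Under that assumption, pure text sits 7 points above chance, while video with text and audio sits 32 points above it.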

Deepfake detection: Is more media literacy a solution?

A large proportion of participants therefore detect a deepfake with audio - but in the experiment they are specifically searching for fakes and know that half of all content is fake.

The audio and video fakes used in the experiment also fall short of the quality of the best current deepfakes. The figures could therefore look significantly different in another context - for example, on a social media platform and with higher-quality videos.

The researchers therefore suggest media literacy programs that encourage reflective thinking, as well as content moderation systems that explain why content is questionable and point people to clues such as image artifacts, semantic errors, or biometric features.

You can try out the deepfake test on MIT's Detect Fakes website.

A recent study on the credibility of deepfake portraits shows that people can hardly tell AI-faked photos from original photos - even if they know they are looking for fakes. What's more, they rate AI-generated faces as more trustworthy.