Neural Rendering for Humans: HumanNeRF synthesizes 3D views of people from a simple YouTube video.

Neural rendering methods promise to augment or even replace time-honored methods of 3D rendering with artificial intelligence. One example is the so-called Neural Radiance Fields (NeRFs), small neural networks that can learn 3D representations from 2D photos and then render them.

The technology has been producing increasingly realistic images since its invention. Some variants can now learn and render complex 3D representations in a matter of seconds. At this year's GTC, for example, Nvidia gave insights into Instant NeRF, a method that is up to 1000 times faster than older methods.

According to David Luebke, vice president of graphics research at Nvidia, NeRFs are comparable to JPEG compression for 2D photography. He explained that if traditional 3D representations like polygon meshes are comparable to vector images, NeRFs are like bitmap images. They capture how light radiates from an object or within a scene.

Luebke says this enables a huge increase in speed, simplicity and reach when capturing and sharing 3D content.

Google deploys NeRFs for Immersive View with Google Maps

The pioneer of NeRF development is Google. The company developed NeRFs together with scientists at UC Berkeley and UC San Diego. Since then, Google has shown AI-rendered street blocks that enable a kind of Street View 3D, and photorealistic 3D renderings of real-world objects thanks to Mip-NeRF 360.

At this year's I/O developer conference, Google showed Immersive View, a synthesized 3D perspective of major cities and individual interior views such as restaurants, also based on neural rendering.

Video: Google

Now, researchers at the University of Washington and Google demonstrate how NeRFs can render people in 3D.

NeRFs for people: Movement and clothing have been a challenge - until now

The new HumanNeRF method solves two problems in representing people with NeRFs: Until now, the networks have primarily worked with static objects and relied on camera shots from multiple angles.

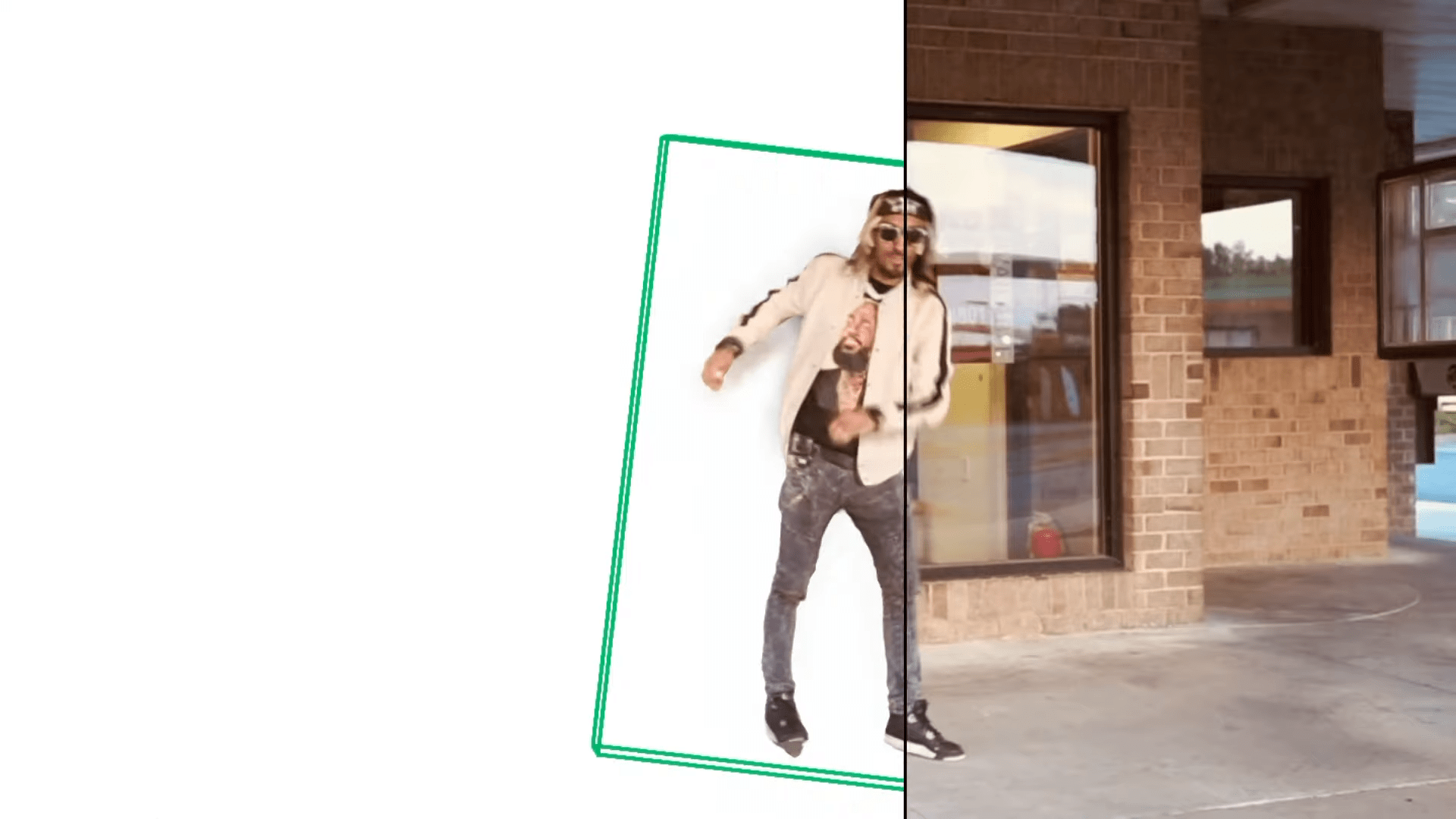

HumanNeRF, by contrast, can render moving people, including the movements of their clothing, from previously unseen angles - with training material from a single camera perspective. This means that the NeRFs can also be trained with a YouTube video in which, for example, a dancing person is filmed head-on.

Video: Weng et al. | University of Washington | Google

HumanNeRF relies on multiple networks that capture a canonical representation of the person in a so-called T-pose, as well as a so-called motion field that learns a rigid skeletal motion and non-rigid motions such as clothing. The pose of the filmed person is additionally captured with a simple pose estimation network.

The learned information of the motion field and pose estimation can then modify the learned canonical representation according to the pose shown in the video and then render it from NeRF.

For Google, HumanNeRF is just the beginning

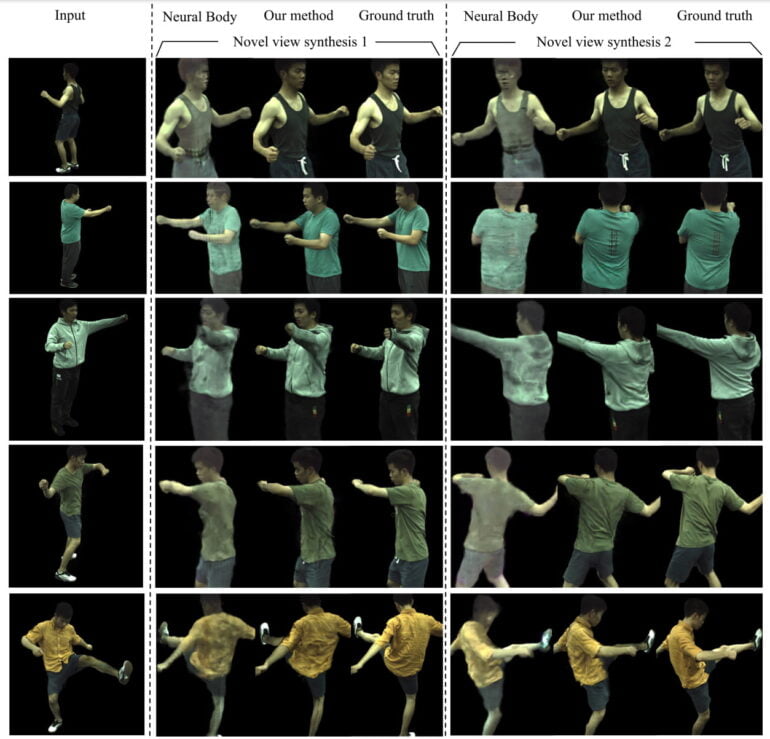

The method thus enables much more realistic 3D renderings than previous methods: The rendered people are more detailed and movements in clothing are clearly visible.

In several examples, the researchers show that a single camera angle is sufficient for 3D rendering - so use in the wild is possible, for example for YouTube videos.

HumanNeRF can also render the complete learned scene from the directly opposite viewpoint after training - this is particularly challenging since not a single rendered pixel was ever visible during training.

As limitations, the researchers cite missing details and a perceptible jerkiness during the transition between different poses, since the temporal coherence in the motion field is not considered.

The technological progress also has its price: The training required 72 hours on four GeForce RTX 2080 Ti GPUs. However, the team points to findings like Nvidia's Instant-NGP, which drastically reduces the required computing power for NeRFs and other neural rendering methods.

So with some improvements and lower computational requirements, the technology could reach end users eventually and provide Google with another building block for the AR future that was clearly drawn at this year's I/O.